Gathering and analyzing data has been a business craze for quite some time now. Yet, too often, gathering takes hold of companies so strongly that little thought is given to actually using the data. There’s a reason we had to invent a name for this phenomenon: “dark data.”

Unfortunately, data is often gathered without a good reason. It’s understandable – a lot of internal data is collected by default. The current business climate necessitates using many tools (e.g., CRMs, accounting logs, billing) that automatically create reports and store data.

The collection process is even more expansive for digital businesses and often includes server logs, consumer behavior, and other tangential information.

Building a (Big) Data Pipeline the Right Way

Unless you’re in the data-as-a-service (DaaS) business, simply collecting data doesn’t bring any benefit. With all the hype surrounding data-driven decision-making, I believe many people have lost sight of the forest for the trees. Collecting all forms of data becomes an end in itself.

In fact, such an approach is costing the business money. There’s no free lunch – someone has to set up the collection method, manage the process, and keep tabs on the results. That’s resources and finances wasted. Instead of striving for the quantity of data, we should be looking for ways to lean out the collection process.

Humble Beginnings

Pretty much every business begins its data acquisition journey by collecting marketing, sales, and account data. Certain practices, such as Pay-Per-Click (PPC), have proven incredibly easy to measure and analyze statistically, which makes data collection a necessity. Meanwhile, relevant data is often produced as a byproduct of regular day-to-day activities in sales and account management.

Businesses have already caught on that sharing data between marketing, sales, and account management departments may lead to great things. However, the data pipeline is often clogged, and the relevant information is only accessed indirectly.

Often, the way departments share information lacks immediacy. There is no direct access to data; instead, it is relayed second-hand through in-person meetings or discussions, which is slow and lossy. By contrast, consistent access to new data may provide departments with important insights.

Interdepartmental Data

Rather unsurprisingly, interdepartmental data can improve efficiency in numerous ways. For example, sharing data on Ideal Customer Profile (ICP) leads between departments will lead to better sales and marketing practices (e.g., a more defined content strategy).

Here’s the burning issue for every business that collects a large amount of data: it’s scattered. Potentially useful information is left all over spreadsheets, CRMs, and other management systems. Therefore, the first step should be not to get more data but to optimize the current processes and prepare the existing data for use.

Combining Data Sources

Luckily, with the advent of Big Data, businesses have been thinking through information management processes in great detail. As a result, data management practices have made great strides in the last few years, making optimization processes a lot simpler.

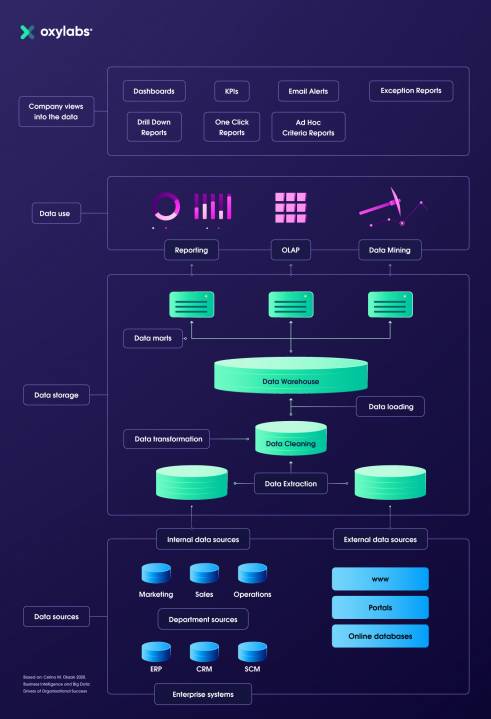

Data Warehouses

A commonly used principle of data management is building a warehouse for data gathered from numerous sources. But, of course, the process isn’t as simple as integrating a few different databases. Unfortunately, data is often stored in incompatible formats, making standardization necessary.

Usually, data integration into a warehouse follows a three-step process: extract, transform, load (ETL). There are different approaches; however, ETL is probably the most popular option. Extraction, in this case, means taking the data that has already been acquired from either internal or external collection processes.

Data transformation is the most complex process of the three. It involves converting data from various formats into a common one and identifying missing or duplicate fields. In most businesses, doing all of this manually is out of the question; therefore, traditional programming methods (e.g., SQL) are used.
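As a rough illustration of the transformation step, here is a minimal Python sketch. The two source layouts, the field names, and the `normalize` and `transform` helpers are all hypothetical; a real pipeline would more likely do this in SQL or a dedicated tool. It maps two formats onto one schema, treats empty values as missing, and keeps one record per email.

```python
from datetime import datetime

# Hypothetical rows as they might arrive from two systems with different formats.
crm_rows = [
    {"Email": "Ana@Example.com ", "Signup": "2023-01-15", "Company": "Acme"},
    {"Email": "bob@example.com", "Signup": "2023-02-01", "Company": ""},
]
billing_rows = [
    {"email_address": "ana@example.com", "created": "15/01/2023", "org": "Acme"},
    {"email_address": "cy@example.com", "created": "03/03/2023", "org": "Initech"},
]


def normalize(row, email_key, date_key, date_format, company_key):
    """Map one source-specific row onto the common schema."""
    return {
        "email": row[email_key].strip().lower(),
        "signup_date": datetime.strptime(row[date_key], date_format).date().isoformat(),
        "company": row[company_key].strip() or None,  # empty string counts as missing
    }


def transform(crm, billing):
    """Return (clean_rows, emails_of_rows_with_missing_fields)."""
    combined = [normalize(r, "Email", "Signup", "%Y-%m-%d", "Company") for r in crm]
    combined += [normalize(r, "email_address", "created", "%d/%m/%Y", "org") for r in billing]

    seen = {}
    for row in combined:
        # Keep the first record per email; later ones are treated as repeats.
        seen.setdefault(row["email"], row)

    clean = list(seen.values())
    incomplete = [r["email"] for r in clean if any(v is None for v in r.values())]
    return clean, incomplete


clean_rows, incomplete = transform(crm_rows, billing_rows)
```

Keeping the first record per email is only one possible deduplication rule; which source should win on a conflict is a business decision rather than a technical one.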

Loading — Moving to the Warehouse

Loading is basically just moving the prepared data to the warehouse in question. While it’s a basic process of moving data from one place to another, it’s important to note that warehouses do not store real-time information. Therefore, keeping operational databases separate from the warehouse means that one can serve as a backup for the other and that unnecessary corruption is avoided.
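A minimal sketch of the loading step might look like the following. It uses an in-memory SQLite database as a stand-in for a real warehouse, and the `customers_history` table, its columns, and the `load` helper are assumptions made up for illustration. Each batch is appended with the date it was loaded, so the operational source is never touched.

```python
import sqlite3


def load(conn, rows, loaded_at):
    """Append prepared rows to the warehouse table; nothing is overwritten."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS customers_history (
               email TEXT NOT NULL,
               company TEXT,
               loaded_at TEXT NOT NULL
           )"""
    )
    conn.executemany(
        "INSERT INTO customers_history (email, company, loaded_at) VALUES (?, ?, ?)",
        [(r["email"], r["company"], loaded_at) for r in rows],
    )
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(warehouse, [{"email": "ana@example.com", "company": "Acme"}], "2023-03-01")
load(warehouse, [{"email": "ana@example.com", "company": "Acme Corp"}], "2023-04-01")

row_count = warehouse.execute("SELECT COUNT(*) FROM customers_history").fetchone()[0]
```

After the two batch loads the table holds both versions of the record, which reflects the periodic, non-real-time nature of warehouse loading.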

Data warehouses usually have a few critical features:

- Integrated. Data warehouses are an accumulation of information from heterogeneous sources into one place.

- Time variant. Data is historical and tied to a particular time period.

- Non-volatile. Previous data is not removed when newer information is added.

- Subject oriented. Data is a collection of information based on subjects (personnel, support, sales, revenue, etc.) instead of being directly related to ongoing operations.
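The time-variant and non-volatile properties above can be sketched with a small history table. This is only an illustration under assumed names (`sales_history`, `plan_as_of`), again using SQLite as a stand-in: new rows are added next to old ones, and a query picks the row that was valid on a given date.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_history (account TEXT, plan TEXT, valid_from TEXT)")
conn.executemany(
    "INSERT INTO sales_history VALUES (?, ?, ?)",
    [
        ("acme", "starter", "2023-01-01"),
        ("acme", "pro", "2023-06-01"),  # newer row is added; the older one stays
        ("initech", "starter", "2023-03-15"),
    ],
)


def plan_as_of(conn, account, date):
    """Return the plan an account had on a given ISO date, or None."""
    row = conn.execute(
        """SELECT plan FROM sales_history
           WHERE account = ? AND valid_from <= ?
           ORDER BY valid_from DESC LIMIT 1""",
        (account, date),
    ).fetchone()
    return row[0] if row else None
```

Because dates are stored as ISO strings, plain string comparison orders them correctly, which keeps the query simple.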

External Data to Maximize Potential

Building a data warehouse is not the only way of getting more from the same amount of information. Warehouses help with interdepartmental efficiency, while data enrichment processes might help with intradepartmental efficiency.

Data Enrichment from External Sources

Data enrichment is the process of combining information from external sources with internal ones. Sometimes, enterprise-level businesses might be able to enrich data from purely internal sources if they have enough different departments.
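In code, enrichment often comes down to a join between internal records and an external dataset. The sketch below assumes a hypothetical external lookup keyed by company email domain; the `enrich` helper and the `industry` and `employees` fields are illustrative, not taken from any particular provider.

```python
def enrich(leads, company_data):
    """Attach external company fields to internal lead records by email domain."""
    enriched = []
    for lead in leads:
        domain = lead["email"].rsplit("@", 1)[-1].lower()
        extra = company_data.get(domain, {})  # no match leaves the fields empty
        enriched.append({
            **lead,
            "domain": domain,
            "industry": extra.get("industry"),
            "employees": extra.get("employees"),
        })
    return enriched


# Hypothetical internal leads and external company data.
internal_leads = [
    {"name": "Ana", "email": "ana@acme.example"},
    {"name": "Bob", "email": "bob@unknown.example"},
]
external_companies = {
    "acme.example": {"industry": "software", "employees": 250},
}

enriched_leads = enrich(internal_leads, external_companies)
```

Leads without a match keep empty fields instead of being dropped, so nothing from the internal source is lost during enrichment.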

While warehouses will work nearly identically for almost any business that deals with large volumes of data, each enrichment process will be different. This is because enrichment processes depend directly on business goals. Otherwise, we would go back to square one, where data is being collected without a proper end goal.

Inbound Lead Enrichment

A simple approach that might be beneficial to many businesses is inbound lead enrichment. Regardless of the industry, responding quickly to requests for more information tends to make sales more efficient. Enriching leads with professional data (e.g., public company information) would provide an opportunity to automatically categorize leads and respond faster to those closer to the Ideal Customer Profile (ICP).
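One way to picture the automatic categorization is a simple scoring function. The ICP criteria below (target industries and an employee range), the `Lead` class, and the `icp_score` and `prioritize` helpers are invented for illustration; real criteria would come from the business itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical ICP definition: target industries and a company size range.
ICP_INDUSTRIES = {"software", "fintech"}
ICP_MIN_EMPLOYEES = 50
ICP_MAX_EMPLOYEES = 1000


@dataclass
class Lead:
    email: str
    industry: Optional[str]
    employees: Optional[int]


def icp_score(lead):
    """Count how many ICP criteria an enriched lead satisfies (0 to 2)."""
    score = 0
    if lead.industry in ICP_INDUSTRIES:
        score += 1
    if lead.employees is not None and ICP_MIN_EMPLOYEES <= lead.employees <= ICP_MAX_EMPLOYEES:
        score += 1
    return score


def prioritize(leads):
    """Order leads so the closest ICP matches are answered first."""
    return sorted(leads, key=icp_score, reverse=True)


inbound = [
    Lead("cy@smallshop.example", "retail", 5),
    Lead("ana@acme.example", "software", 250),
    Lead("bob@bigbank.example", "fintech", 20000),
]
queue = prioritize(inbound)
```

In this sketch, leads with missing enrichment data simply score lower; a real process might route them to manual review instead.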

Of course, data enrichment need not be limited to sales departments. All kinds of processes can be empowered by external data – from marketing campaigns to legal compliance. However, as always, specifics have to be kept in mind. All data should serve a business purpose.

Conclusion

Before treading into complex data sources, cleaning up internal processes will bring greater results. With dark data comprising over 90% of all data collected by businesses, it’s better at first to look inwards and optimize the current processes. Adding more sources before that will only bury some potentially useful information under inefficient data management practices.

After creating robust systems for data management, we can move on to gathering complex data. We can then be sure we won’t miss anything important and be able to match more data points for valuable insights.

Image Credit: rfstudio; pexels; thank you!

The post Building a (Big) Data Pipeline the Right Way appeared first on ReadWrite.
